Abstract

We present EVAL Engine (Evaluation Validation Architecture), a decentralized framework for evaluating AI agents, with a focus on crypto-native agents, through verifiable real-time assessments and continuous learning.

Our system uses Chromia's gas-free relational blockchain architecture to enable transparent, immutable, and cost-effective evaluation of AI agent performance. It combines multiple LLM-as-a-judge evaluators[1] with social engagement metrics to drive continuous reinforcement learning through a feedback loop and reward system.

We demonstrate that EVAL Engine can achieve efficient, secure evaluations while adapting to evolving performance standards through engagement-driven feedback loops.

We also present a comprehensive roadmap for the development of EVAL Engine, including API development, data preparation, model development, and model deployment.

Introduction

Crypto x AI agents have taken off on Crypto Twitter (CT), particularly on the social side. However, most of the AI agents we have seen do not even have evaluation metrics. This creates a significant risk of hallucination, the generation of plausible yet factually unsupported content[2]. Without standardized evaluation metrics, it is difficult to verify the reliability and trustworthiness of these agents' outputs, particularly for sensitive financial and cryptocurrency-related information.

Key Challenges

- Lack of real-time verification capabilities.

- High cost of storing evaluation data reliably on-chain due to gas fees.

- Absence of continuous learning mechanisms.

- Limited integration with engagement metrics.

Key Objectives

- Achieving real-time, on-chain verification of AI agent performance.

- Minimizing gas-related overhead through a gas-free, scalable relational blockchain.

- Building a continuous learning workflow that leverages social engagement metrics.

- Ensuring data integrity via double-signed transactions and robust smart contracts.

EVAL Engine addresses these challenges head-on with a decentralized system specifically designed for real-time, cost-effective, and transparent evaluations.

Built on Chromia's gas-free relational blockchain, our framework enables efficient data storage while preserving immutability and traceability.

By integrating LLM-based judgments, social engagement metrics, and continuous reinforcement learning strategies, EVAL Engine creates a dynamic environment in which AI agents, particularly crypto-native ones, can evolve and improve continuously.

System Architecture

Data Format & Ingestion Layer

- Standardized APIs facilitate interoperability with different AI agents, evaluation modules, and social media platforms.

- Enables quick retrieval of AI agent outputs and historical evaluation scores.

- Normalizes incoming data (e.g., raw tweets, platform engagement stats) to ensure consistency before evaluation.
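The normalization step in the ingestion layer can be pictured as mapping each platform's raw payload onto one common record before any evaluator sees it. This is a minimal sketch, assuming a hypothetical `NormalizedPost` schema and hypothetical input keys (`author`, `full_text`, `favorite_count`, `retweet_count`, `reply_count`, `created_at_unix`); it is not EVAL Engine's actual data format.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class NormalizedPost:
    """Platform-independent record handed to the evaluators (hypothetical schema)."""
    agent_id: str
    text: str
    likes: int
    retweets: int
    comments: int
    created_at: datetime


def normalize_tweet(raw: dict) -> NormalizedPost:
    """Map a raw tweet-like payload onto NormalizedPost.

    The input keys are assumptions for illustration, not a documented format.
    """
    created = datetime.fromtimestamp(int(raw["created_at_unix"]), tz=timezone.utc)
    return NormalizedPost(
        agent_id=str(raw["author"]).strip().lower(),
        # Collapse runs of whitespace and newlines so evaluators see consistent text.
        text=" ".join(str(raw.get("full_text", "")).split()),
        # Missing or null counters default to 0 instead of failing.
        likes=int(raw.get("favorite_count") or 0),
        retweets=int(raw.get("retweet_count") or 0),
        comments=int(raw.get("reply_count") or 0),
        created_at=created,
    )


post = normalize_tweet({
    "author": "  SomeAgent ",
    "full_text": "GM   to all\nbuilders",
    "favorite_count": "12",      # counters may arrive as strings
    "retweet_count": None,       # or be missing/null
    "created_at_unix": 1700000000,
})
print(post.agent_id, post.text, post.likes, post.retweets)
```

Defaulting missing counters to zero keeps one malformed payload from blocking a whole batch; a stricter pipeline might instead reject such records.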

EVAL Engine: Evaluation Engine

- The EVAL Engine orchestrates multiple LLM evaluators to form a composite score that reflects several performance metrics (accuracy, creativity, truthfulness, and engagement).

- Each LLM evaluator runs asynchronously, enabling parallel evaluation and lower latency. The evaluators are powered by DSPy[6].

- Final evaluation scores and relevant data are batched for submission to Chromia's gas-free blockchain.
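The orchestration described in these bullets (several judges running concurrently, a weighted composite score, results collected into a batch) can be sketched with plain `asyncio`. This is a minimal sketch, not the DSPy-based implementation: the judges are hypothetical stubs that sleep and return fixed scores instead of calling an LLM, and the weights are illustrative.

```python
import asyncio
from typing import Awaitable, Callable

# A judge takes an agent output and returns a score in [0, 1].
Judge = Callable[[str], Awaitable[float]]


def make_stub_judge(score: float, delay_s: float) -> Judge:
    """Hypothetical stand-in for an LLM judge call; no model is queried."""
    async def judge(output: str) -> float:
        await asyncio.sleep(delay_s)  # simulates inference latency
        return score
    return judge


async def evaluate(output: str, judges: dict[str, Judge],
                   weights: dict[str, float]) -> dict:
    """Run all judges concurrently and combine them into a weighted composite."""
    criteria = list(judges)
    # gather() runs the judge coroutines concurrently, so total latency is
    # roughly that of the slowest judge rather than the sum of all of them.
    scores = await asyncio.gather(*(judges[c](output) for c in criteria))
    sub_scores = dict(zip(criteria, scores))
    composite = sum(weights[c] * sub_scores[c] for c in criteria)
    return {"sub_scores": sub_scores, "composite": composite}


async def main() -> list[dict]:
    judges = {
        "accuracy": make_stub_judge(0.8, 0.05),
        "creativity": make_stub_judge(0.6, 0.05),
        "truthfulness": make_stub_judge(0.9, 0.05),
        "engagement": make_stub_judge(0.5, 0.05),
    }
    weights = {"accuracy": 0.3, "creativity": 0.2,
               "truthfulness": 0.3, "engagement": 0.2}
    outputs = ["post A", "post B"]
    # Evaluate several outputs concurrently and collect them into one batch,
    # which would then be submitted on-chain in a single call.
    batch = await asyncio.gather(*(evaluate(o, judges, weights) for o in outputs))
    return list(batch)


batch = asyncio.run(main())
print(round(batch[0]["composite"], 2))  # 0.73
```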

Storage & Smart Contract Layer

- Stores evaluation results in a structured table-based format.

- Relational indexing allows for advanced queries, efficient retrieval, and scalable data operations.

- Both the AI agent and the evaluation service provider must sign off on each transaction to ensure mutual transparency and accountability.
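Table-based storage with relational indexing can be illustrated with any relational engine. The sketch below uses Python's built-in `sqlite3` purely as a stand-in for Chromia's relational model. It does not show Rell or Chromia's actual schema, and the table and column names are hypothetical.

```python
import sqlite3

# In-memory SQLite as a stand-in for a relational on-chain store.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE agent (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE evaluation (
    id           INTEGER PRIMARY KEY,
    agent_id     INTEGER NOT NULL REFERENCES agent(id),
    criterion    TEXT NOT NULL,
    score        REAL NOT NULL CHECK (score BETWEEN 0 AND 1),
    evaluated_at INTEGER NOT NULL  -- Unix timestamp
);
-- Composite index serving "evaluation history of agent X over time" queries.
CREATE INDEX idx_eval_agent_time ON evaluation (agent_id, evaluated_at);
""")

conn.execute("INSERT INTO agent (id, name) VALUES (1, 'agent_a'), (2, 'agent_b')")
conn.executemany(
    "INSERT INTO evaluation (agent_id, criterion, score, evaluated_at) "
    "VALUES (?, ?, ?, ?)",
    [
        (1, "accuracy", 0.8, 100),
        (1, "accuracy", 0.6, 200),
        (1, "creativity", 0.7, 200),
        (2, "accuracy", 0.9, 150),
    ],
)

# A relational query: average score per agent and criterion, joined to names.
rows = conn.execute("""
    SELECT a.name, e.criterion, AVG(e.score)
    FROM evaluation AS e JOIN agent AS a ON a.id = e.agent_id
    GROUP BY a.name, e.criterion
    ORDER BY a.name, e.criterion
""").fetchall()
for name, criterion, avg_score in rows:
    print(name, criterion, round(avg_score, 2))
```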

Engagement Evaluation Module

- Ingests real-time engagement data from Twitter (likes, retweets, commentary) to gauge public reception.

- Uses these metrics to dynamically adjust weighting factors in the multi-LLM evaluation engine.

- Incorporates user feedback and on-chain predictions into a long-term reinforcement learning algorithm.

Continuous Evaluation & Reinforcement Learning

- Submitted evaluations feed additional insights into the AI agent's model, refining future outputs.

- Performance thresholds aren't static; they evolve based on community engagement and empirical performance data.
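One way a threshold could "evolve" is to track a running average of recent composite scores (which already carry engagement through the weights). This is a minimal sketch under that assumption: the exponential-moving-average rule and the `alpha`, `margin`, `floor`, and `ceiling` parameters are hypothetical choices for illustration, not EVAL Engine's documented mechanism.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveThreshold:
    """Pass/fail threshold that drifts with recently observed composite scores.

    The exponential-moving-average (EMA) update is an assumed rule; all
    parameters are hypothetical.
    """
    value: float = 0.5     # current threshold
    alpha: float = 0.1     # how quickly the threshold follows new scores
    margin: float = 0.05   # require scores slightly above the running average
    floor: float = 0.3     # never relax below this
    ceiling: float = 0.9   # never tighten above this

    def update(self, composite_score: float) -> float:
        """Move the threshold toward (score + margin), clamped to [floor, ceiling]."""
        target = composite_score + self.margin
        blended = (1 - self.alpha) * self.value + self.alpha * target
        self.value = min(self.ceiling, max(self.floor, blended))
        return self.value

    def passes(self, composite_score: float) -> bool:
        return composite_score >= self.value


threshold = AdaptiveThreshold()
for score in [0.70, 0.72, 0.75]:  # composite scores arriving over time
    threshold.update(score)
print(threshold.value, threshold.passes(0.7))
```

Clamping keeps a burst of unusually high or low scores from pushing the bar to an extreme; a small `alpha` makes the threshold move slowly relative to individual evaluations.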

Evaluation Technicalities

Traditional single-prompt scoring systems are vulnerable to manipulation through prompt injection and prompt leaking. EVAL Engine implements a robust multi-layer approach to ensure evaluation integrity:

Multi-LLM Evaluation Engine

- Parallel evaluation using multiple specialized LLMs, each focused on distinct criteria (truthfulness, creativity, accuracy and engagement)

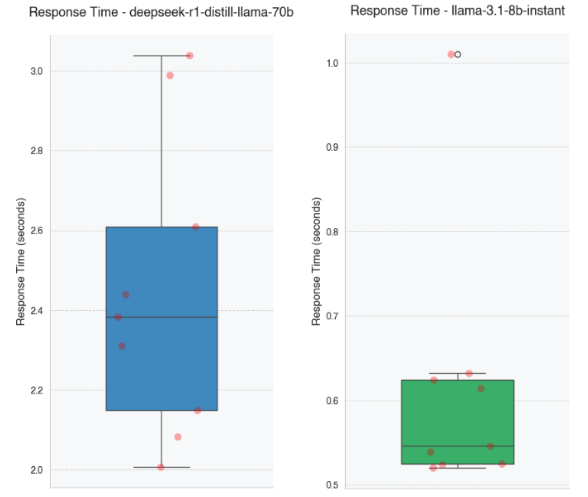

- Our models are served by Hyperbolic[4] and Groq[5], which provide low-latency inference for open-source LLMs and keep our response times within industry standards. Different LLMs are used for different criteria to ensure accuracy and diversity. The figures below show average response times from our providers for some of the LLMs powering EVAL Engine.

Note: The models below are not an exhaustive list of the LLMs we use, only a few examples. Each figure is computed over 50 calls.

| Model | Average | Range | Std Dev | Median |
|---|---|---|---|---|
| DeepSeek R1 Distill LLaMA 70B | 2.39 s | 1.94-2.94 s | 0.29 s | 2.32 s |
| LLaMA 3.1 8B Instant | 0.56 s | 0.49-0.70 s | 0.07 s | 0.53 s |
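Latency statistics like those reported above (average, range, standard deviation, median over 50 calls) can be reproduced with a small timing harness. This is a minimal sketch: `time_calls` and `summarize` are hypothetical helpers, `fn` stands in for whatever provider request is being measured, and since the text does not say whether the standard deviation is the sample or population form, the sample form is assumed.

```python
import statistics
import time
from typing import Callable


def time_calls(fn: Callable[[], object], n: int = 50) -> list[float]:
    """Call fn n times and return the wall-clock latency of each call in seconds."""
    latencies = []
    for _ in range(n):
        start = time.perf_counter()
        fn()
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize(latencies: list[float]) -> dict[str, float]:
    """Average, median, range endpoints and (sample) standard deviation."""
    return {
        "average": statistics.mean(latencies),
        "median": statistics.median(latencies),
        "min": min(latencies),
        "max": max(latencies),
        "std_dev": statistics.stdev(latencies),  # sample standard deviation (assumed)
    }


# Usage: time a local placeholder function; a real run would wrap an API request.
latencies = time_calls(lambda: sum(range(10_000)), n=50)
print(summarize(latencies))
```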

Real-Time Engagement Analysis

- Integration of social signals (likes, shares, comments) for continuous evaluation refinement

- Feedback loop for continuous improvement of evaluation criteria

On-Chain Storage

- Final evaluation outcomes and intermediate results are stored on Chromia's relational blockchain, guaranteeing transparency, verifiability, and historical traceability.

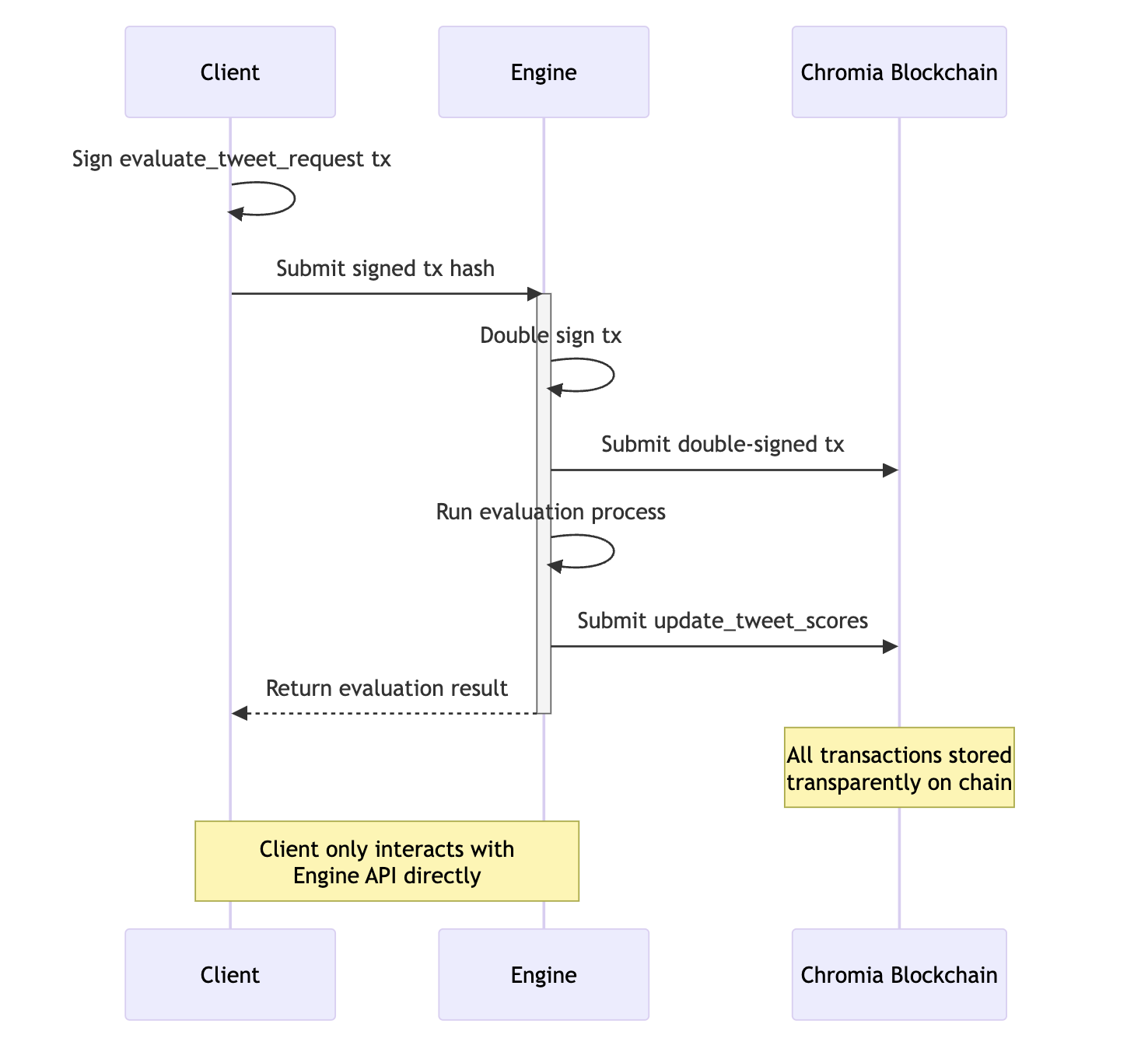

Technical Process Flow

1. Client signs an evaluation request transaction

2. Engine receives and double-signs the transaction

3. Engine submits the signed transaction to Chromia blockchain

4. Engine processes the evaluation

5. Engine stores results on the blockchain

6. Client receives the evaluation results

* All transactions and results are permanently stored on Chromia blockchain, ensuring transparency and auditability. Clients interact with the system through a simple SDK/API interface, while the Engine handles all blockchain operations.
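The double-signing step in this flow means a transaction is accepted only when both the client and the engine have signed the same payload. This is a minimal sketch of that rule: HMAC-SHA256 with per-party secret keys stands in for real public-key signatures (which a blockchain such as Chromia would use), and the `EvalTx` type, keys, and payload fields are all hypothetical.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field

# HMAC with secret keys is a stand-in for public-key signatures; it shows the
# "both parties must sign" rule, not real transaction cryptography.


@dataclass
class EvalTx:
    payload: dict
    signatures: dict[str, str] = field(default_factory=dict)

    def message(self) -> bytes:
        # Canonical JSON so both parties sign exactly the same bytes.
        return json.dumps(self.payload, sort_keys=True, separators=(",", ":")).encode()

    def sign(self, party: str, key: bytes) -> None:
        self.signatures[party] = hmac.new(key, self.message(), hashlib.sha256).hexdigest()


def verify_double_signed(tx: EvalTx, keys: dict[str, bytes]) -> bool:
    """Accept only if every required party has a valid signature on the payload."""
    for party, key in keys.items():
        expected = hmac.new(key, tx.message(), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(tx.signatures.get(party, ""), expected):
            return False
    return True


keys = {"client": b"client-secret", "engine": b"engine-secret"}
tx = EvalTx({"agent_id": "agent_a", "post_id": "123", "request": "evaluate"})
tx.sign("client", keys["client"])      # step 1: client signs the request
print(verify_double_signed(tx, keys))  # False: engine has not co-signed yet
tx.sign("engine", keys["engine"])      # step 2: engine co-signs
print(verify_double_signed(tx, keys))  # True: ready to submit
```

Because both signatures cover the same canonical payload, any later change to the payload invalidates both, which is what gives the mutual accountability described in the storage layer.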

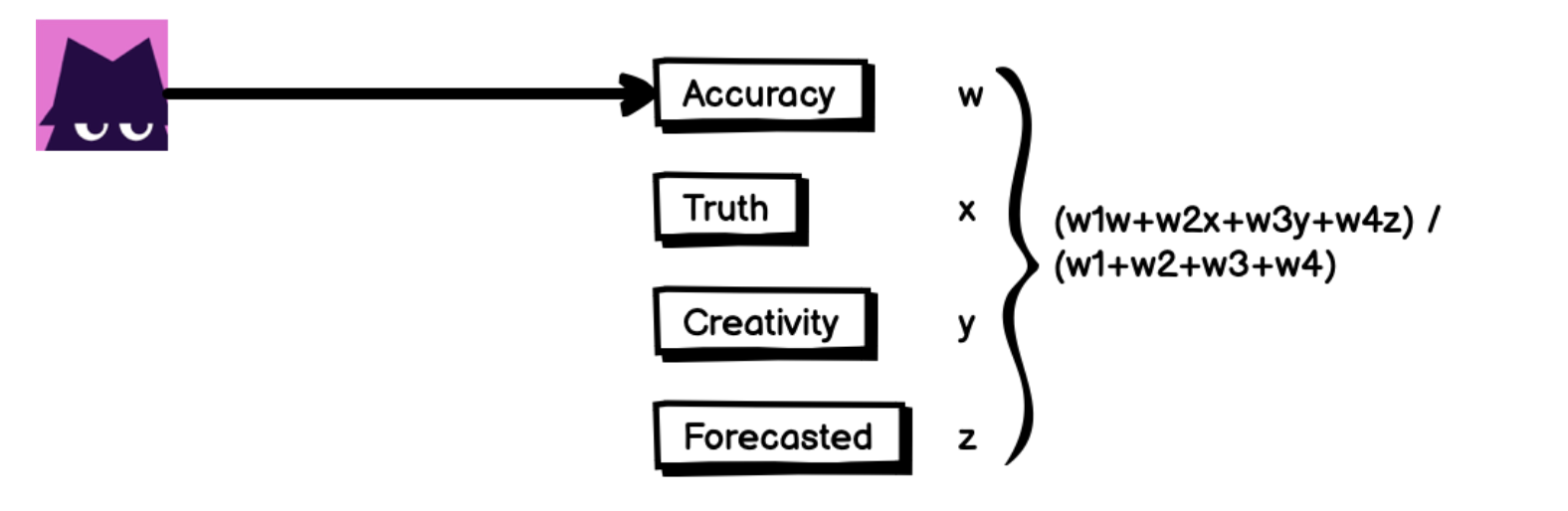

Evaluation Mathematics

Weighted Average Evaluation

Let $\{sc_1, sc_2, \ldots, sc_N\}$ be the individual scores from different evaluation criteria (e.g., factual correctness, creativity, coherence) and $\{w_1, w_2, \ldots, w_N\}$ their respective weights, where $\sum_{i=1}^{N} w_i = 1$.

$$S = \sum_{i=1}^{N} w_i \, sc_i$$

Where:

$sc_i$ = sub-score from the $i$-th model or criterion

$w_i$ = weighting factor reflecting the importance of sub-score $sc_i$
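The weighted average above translates directly into code. This minimal sketch adds two checks implied by the definition (one weight per score, weights summing to 1); the function name and the example sub-scores are hypothetical.

```python
import math


def weighted_score(scores: list[float], weights: list[float]) -> float:
    """S = sum_i w_i * sc_i, where the weights must sum to 1."""
    if len(scores) != len(weights):
        raise ValueError("scores and weights must have the same length")
    if not math.isclose(sum(weights), 1.0, abs_tol=1e-9):
        raise ValueError("weights must sum to 1")
    return sum(w * sc for w, sc in zip(weights, scores))


# Hypothetical sub-scores for factual correctness, creativity, coherence.
S = weighted_score(scores=[0.9, 0.6, 0.8], weights=[0.5, 0.2, 0.3])
print(round(S, 2))  # 0.81
```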

Engagement-Driven Feedback Loop

Real-time engagement metrics are incorporated through an engagement factor E:

$$E = \alpha R + \beta L + \gamma C$$

Where:

$R$ = retweets, $L$ = likes, $C$ = comments

$\alpha, \beta, \gamma$ = importance coefficients for each metric
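The engagement factor is a linear combination of the three counts. This minimal sketch assumes raw counts, since the text does not say whether $R$, $L$, and $C$ are normalized; with raw counts $E$ can grow large, so the impact factor $\delta$ used in the weight update would need to be scaled accordingly. The coefficient values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EngagementCoefficients:
    alpha: float  # importance of retweets (R)
    beta: float   # importance of likes (L)
    gamma: float  # importance of comments (C)


def engagement_factor(retweets: int, likes: int, comments: int,
                      c: EngagementCoefficients) -> float:
    """E = alpha*R + beta*L + gamma*C, using raw counts (assumed)."""
    if min(retweets, likes, comments) < 0:
        raise ValueError("engagement counts cannot be negative")
    return c.alpha * retweets + c.beta * likes + c.gamma * comments


coeffs = EngagementCoefficients(alpha=0.5, beta=0.2, gamma=0.3)  # hypothetical values
E = engagement_factor(retweets=10, likes=50, comments=5, c=coeffs)
print(E)
```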

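Dynamic weight adjustment:

$$\tilde{w}_i = w_i + \delta E, \qquad w_i' = \frac{\tilde{w}_i}{\sum_{j=1}^{N} \tilde{w}_j}$$

Where:

$\delta$ = engagement impact factor

$w_i'$ = adjusted weight after normalization, so that $\sum_{i=1}^{N} w_i' = 1$

Final weighted score:

$$S^* = \sum_{i=1}^{N} w_i' \, sc_i$$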
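The weight update and final score can be sketched as follows. This assumes "after normalization" means dividing each shifted weight by the sum of all shifted weights, which keeps the weights summing to 1. The base weights, sub-scores, $E$, and $\delta$ values in the example are hypothetical.

```python
def adjust_weights(weights: list[float], engagement: float, delta: float) -> list[float]:
    """Shift every weight by delta*E, then renormalize so the weights sum to 1.

    Normalizing by the sum of the shifted weights is an assumed reading of
    "adjusted weight after normalization".
    """
    shifted = [w + delta * engagement for w in weights]
    total = sum(shifted)
    if total <= 0:
        raise ValueError("adjusted weights must have a positive sum")
    return [w / total for w in shifted]


def final_score(scores: list[float], weights: list[float],
                engagement: float, delta: float) -> float:
    """S* = sum_i w_i' * sc_i using the engagement-adjusted weights w_i'."""
    adjusted = adjust_weights(weights, engagement, delta)
    return sum(w * sc for w, sc in zip(adjusted, scores))


weights = [0.5, 0.2, 0.3]  # hypothetical base weights
scores = [0.9, 0.6, 0.8]   # hypothetical sub-scores
adjusted = adjust_weights(weights, engagement=16.5, delta=0.01)
print([round(w, 3) for w in adjusted])
print(round(final_score(scores, weights, engagement=16.5, delta=0.01), 3))
```

Because the formula adds the same $\delta E$ to every weight, higher engagement pulls the normalized weights toward uniform ($1/N$ each) rather than toward any particular criterion. In the example, the largest weight drops from 0.5 to about 0.445. Letting engagement favor specific criteria would require per-criterion factors $\delta_i$, which the formula as written does not include.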

Tokenomics

$EVAL Token Overview

$EVAL is a fair-launch project on Virtuals that will later be bridged to Chromia as an FT4 token. How $EVAL bridges to $CHR is detailed below:

Account Creation Requirements

To set up a self-sovereign Evaluation Engine account, users must hold a minimum of 10 $CHR. This requirement creates an entry barrier that discourages spam and ensures that every registered account has a stake in the network's integrity.

Subscription Model in $EVAL

Each evaluation report on EVAL Engine is paid for in $EVAL tokens through a subscription model[3]. By tokenizing the cost per evaluation, the platform can meter and serve real-time user demand for evaluation services.

Token Utility and Conversion

EVAL Engine uses $EVAL tokens to acquire $CHR when computational resources are required. This design leverages Chromia's gas-free architecture while maintaining a clear payment model. Because each evaluation is backed by tokens (both $EVAL and $CHR), the network remains secure, cost-effective, and accessible for continuous AI evaluation needs.

Roadmap

EVAL Engine continually refines its AI evaluation capabilities, leverages decentralized data storage on Chromia, and integrates real-time social signals to maintain accuracy and adaptability.

Phase 1: API Development

- Deploy evaluation APIs using Virtuals SDK for seamless integration with partner ecosystems

- Develop custom endpoints for both public and partner access

- Create an intuitive frontend interface for:

  - API access management

  - Evaluation history visualization

  - Performance metrics dashboard

Phase 2: Data Preparation

- Implement ELT+L (Extract, Load, Transform + Load to Chromia) pipeline

- Data processing workflow:

  - Aggregate raw input from multiple sources

  - Clean and normalize user feedback and social signals

  - Process upvotes and engagement metrics

- Establish manual and automated labeling workflows for specific tasks
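The ELT+L ordering (extract, load raw data, transform it in place, then load the cleaned result to Chromia) can be sketched as four small stages. This is a minimal illustration: the function names are hypothetical, a Python list stands in for the staging store, and a `submit` callback stands in for a Chromia client, whose real API is not shown.

```python
from typing import Callable, Iterable


def extract(sources: dict[str, Iterable[dict]]) -> list[dict]:
    """Extract: pull raw records from each source, tagging their origin."""
    return [{**rec, "source": name} for name, recs in sources.items() for rec in recs]


def load_raw(records: list[dict], staging: list[dict]) -> None:
    """Load: land the raw records unchanged in a staging area."""
    staging.extend(records)


def transform(staging: list[dict]) -> list[dict]:
    """Transform: clean text, normalize upvotes, drop rows with nothing to evaluate."""
    cleaned = []
    for rec in staging:
        text = " ".join(str(rec.get("text", "")).split())
        if not text:
            continue  # empty posts carry no signal
        cleaned.append({
            "source": rec["source"],
            "text": text,
            "upvotes": max(0, int(rec.get("upvotes") or 0)),  # clamp bad counts
        })
    return cleaned


def load_to_chain(rows: list[dict], submit: Callable[[list[dict]], None]) -> int:
    """+L: hand the cleaned rows to a submit callback (stand-in for a chain client)."""
    submit(rows)
    return len(rows)


staging: list[dict] = []
load_raw(extract({
    "twitter": [{"text": "  gm  ", "upvotes": "3"}, {"text": ""}],
    "forum": [{"text": "hello", "upvotes": -2}],
}), staging)
rows = transform(staging)
submitted: list[list[dict]] = []
count = load_to_chain(rows, submitted.append)
print(count, rows)
```

Keeping the raw records in staging before transforming them is the point of ELT over ETL: the cleaning rules can be revised and re-run without re-extracting from the sources.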

Phase 3: Model Development

- Fine-tune lightweight open-source AI models:

  - Fine-tune open-source models on TogetherAI or another provider

  - Optimize for evaluation-specific tasks

- Data integration:

  - $GRASS UpVoteWeb dataset integration for engagement metrics

  - $Elfa.ai real-time data ingestion as a source of truth

  - Custom fine-tuning data prepared by the Chromia team

- Set up a real-time engagement tracking system for:

  - Social media likes and retweets

  - User comments and feedback

  - Performance metrics analysis

Phase 4: Model Deployment

- Hugging Face platform integration:

  - Public access deployment

  - Community collaboration framework

  - Version control and model tracking

- Continuous improvement cycle:

  - Global feedback integration

  - Dataset updates and refinement

  - Performance optimization

References

[1] Zheng, L., et al. (2023). "Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena." [v4] arXiv:2306.05685

[2] Huang, L., et al. (2023). "A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions." [v2] arXiv:2311.05232

[3] Chromia Documentation. "Subscription Fee Strategy." Chromia FT4 Documentation

[4] Hyperbolic. "The Open Access AI Cloud."

[5] Groq. "Platform for Fast AI Inference."

[6] DSPy. "Framework for programming, rather than prompting, language models."